A simple Python project for testing PySpark algorithms that perform Data Vault transformations on Spark.

System Requirements

To start developing the project, create a local development environment:

$ cd pyspark-datavault

$ conda create -p ./env python=3.8

$ conda activate ./env

$ poetry install

To run the project's tests, execute the following command from the project root:

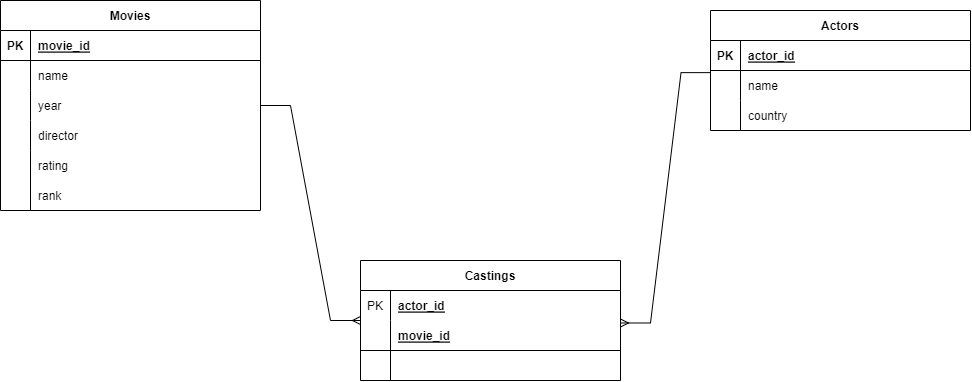

$ python -m pytest tests

Relational Schema

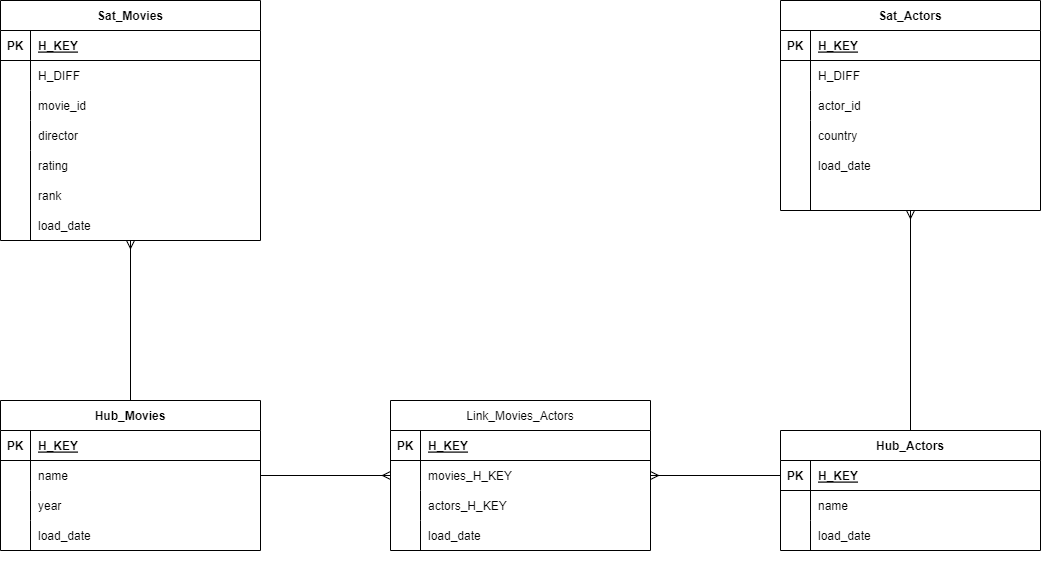
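The exact mapping from the relational tables to the Data Vault model is shown only in the diagrams. As a rough illustration, the plain-Python sketch below splits one relational row into a hub record and a satellite record, and it builds a link record for a related row. It follows common Data Vault 2.0 conventions: MD5 hash keys over trimmed, upper-cased business keys, and a case-preserving hashdiff over the descriptive attributes. The `customer` and `order` tables, their column names, the `||` delimiter and the choice of MD5 are all assumptions for illustration, not taken from this project. The project itself runs these transformations on Spark. This sketch avoids PySpark so that it runs without a Spark session.

```python
import hashlib
from datetime import datetime, timezone
from typing import Dict, Iterable, Tuple

DELIMITER = "||"  # assumed separator between concatenated key parts

Record = Dict[str, object]


def _md5_join(parts: Iterable[object], upper: bool) -> str:
    """Trim each part, optionally upper-case it, join with DELIMITER and MD5 the result."""
    cleaned = [str(p).strip() for p in parts]
    if upper:
        cleaned = [c.upper() for c in cleaned]
    return hashlib.md5(DELIMITER.join(cleaned).encode("utf-8")).hexdigest()


def hash_key(*business_keys: object) -> str:
    """Hub/link key: hash of business keys, insensitive to case and surrounding whitespace."""
    return _md5_join(business_keys, upper=True)


def hash_diff(*attributes: object) -> str:
    """Satellite change-detection hash; case is preserved so 'alice' -> 'Alice' counts as a change."""
    return _md5_join(attributes, upper=False)


def customer_to_vault(row: Record, record_source: str, load_ts: datetime) -> Tuple[Record, Record]:
    """Split one hypothetical `customer` row into a hub record and a satellite record."""
    customer_hk = hash_key(row["customer_id"])
    hub = {
        "customer_hk": customer_hk,
        "customer_id": row["customer_id"],  # business key, kept as delivered
        "load_ts": load_ts,
        "record_source": record_source,
    }
    # Descriptive attributes live in the satellite; the hashdiff lets a loader
    # skip rows whose attributes did not change since the previous load.
    descriptive = {"name": row["name"], "email": row["email"]}
    sat = {
        "customer_hk": customer_hk,
        "hashdiff": hash_diff(*descriptive.values()),
        **descriptive,
        "load_ts": load_ts,
        "record_source": record_source,
    }
    return hub, sat


def customer_order_link(order_row: Record, record_source: str, load_ts: datetime) -> Record:
    """Build a link record between a hypothetical `order` row and its customer."""
    return {
        "link_hk": hash_key(order_row["customer_id"], order_row["order_id"]),
        "customer_hk": hash_key(order_row["customer_id"]),
        "order_hk": hash_key(order_row["order_id"]),
        "load_ts": load_ts,
        "record_source": record_source,
    }


if __name__ == "__main__":
    ts = datetime(2024, 1, 1, tzinfo=timezone.utc)
    hub, sat = customer_to_vault(
        {"customer_id": "C-1", "name": "Alice", "email": "alice@example.com"}, "crm", ts
    )
    link = customer_order_link({"order_id": "O-100", "customer_id": "C-1"}, "shop", ts)
    print(hub, sat, link, sep="\n")
```

Because the hub key and the link's `customer_hk` are computed from the same normalized business key, they join without a lookup. In PySpark, a comparable hash key could be expressed as a column with `pyspark.sql.functions.md5(concat_ws("||", ...))` over trimmed, upper-cased key columns. Note that `concat_ws` skips nulls, so null handling would need an explicit convention.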

Data Vault Schema